Muon: The Magic of Three Numbers Making GPUs Go Brrr

The Muon optimization algorithm stablizes multi-million dollar GPu training run and does so on the strength of three "magic" numbers

“The coefficients (3.4445, -4.7750, 2.0315) were chosen to maximize convergence rate, with the surprising observation that empirically, ε can be as high as around 0.3 without harming the loss curve.”

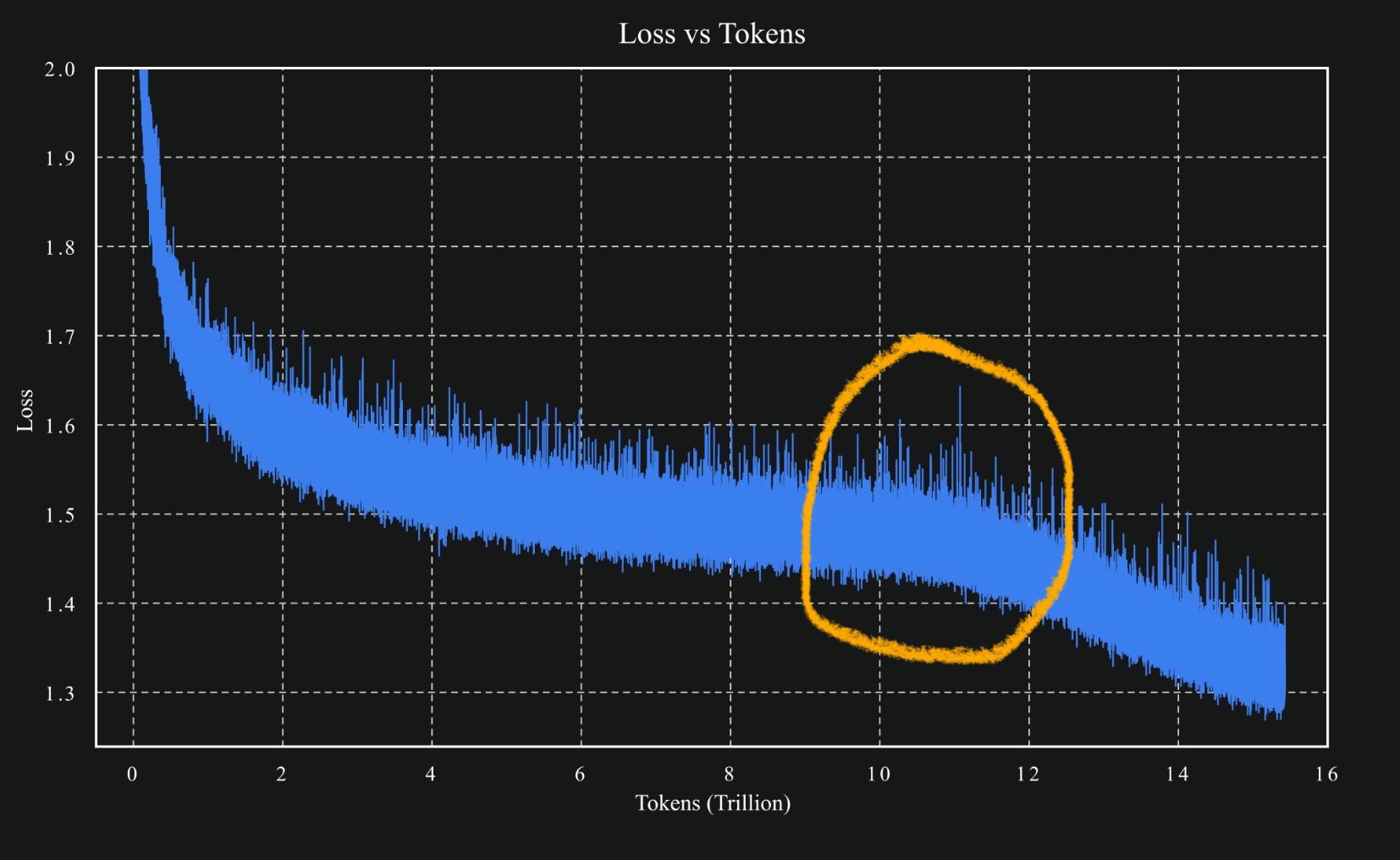

Like many, the recent success of Muon in large scale training runs for Kimi caught my attention. The loss curve plot made the rounds (for good reason):

The core insight behind Muon is elegant: instead of applying gradient updates directly, it uses Newton-Schulz matrix iteration to pseudo-orthogonalize the updates first. This approximates (key word) finding the nearest semi-orthogonal matrix to the gradient update.

Why is this useful? The blog touches on theory (rare gradient directions are less likely to get drowned out), but ultimately like most deep learning improvements: because it works.

Reading through the original Muon blog post by Keller Jordan, I was somewhat surprised to see that the “pseudo” orthogonalization relies on 3 magic numbers.

Here’s the core algorithm:

def newtonschulz5(G, steps=5, eps=1e-7):

a, b, c = (3.4445, -4.7750, 2.0315) # The magic numbers!

X = G.bfloat16()

X /= (X.norm() + eps)

if G.size(0) > G.size(1):

X = X.T

for _ in range(steps):

A = X @ X.T

B = b * A + c * A @ A

X = a * X + B @ X

if G.size(0) > G.size(1):

X = X.T

return X

These constants were derived emprically to maximize convergence rate. There’s solid mathematic reasoning behind the choice, but still. 3 numbers that work for all models? Crazy.

In many cases, magic numbers == bad. In some cases, magic numbers == stabilizing multi-million dollar GPU-go-brrr training runs.